CERLab: Activity Tracker

Problem

An efficient, automated pipeline for understanding construction site activities, expressed as human-object interactions (HOIs), would have a significant impact on the construction and manufacturing industries. Once the interactions are identified, the safety of on-site activities can be evaluated with rule-based heuristics or data-driven metrics.

Understanding human-object interactions with computer vision has become a popular research topic. Existing frameworks take images or point clouds as input, perform low-level feature extraction and object identification, and then infer the relationships among the identified objects.

Liuyue Xie, Tomotake Furuhata, Kenji Shimada, et al., "Learning 3D Human Object Interaction Graphs from Transferable Context Knowledge for Industrial Sites," IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

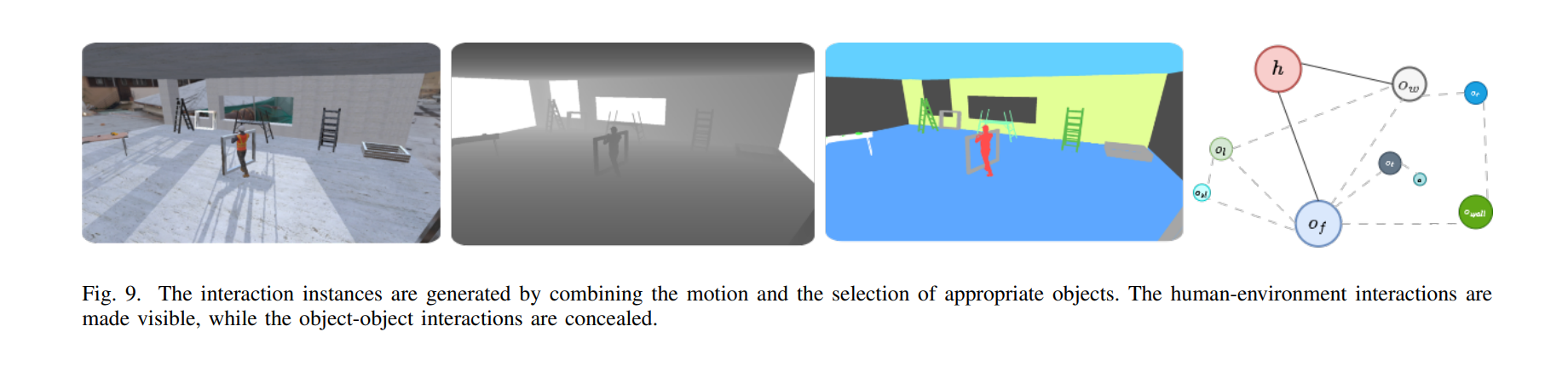
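The relationships such frameworks infer can be stored as a graph whose nodes are the identified people and objects and whose edges are interactions, which is also the form a rule-based safety check can query. The Python sketch below is illustrative only: the `Node`/`HOIGraph` schema, the `"carrying"` relation and the `lone_heavy_lift_violations` rule (flag a heavy object carried by only one worker) are hypothetical and are not taken from the cited paper.

```python
from dataclasses import dataclass, field

# Hypothetical schema for illustration: node labels and relation names
# ("worker", "window_frame", "carrying") are assumptions, not the paper's format.
@dataclass(frozen=True)
class Node:
    node_id: str
    label: str  # person or object category, e.g. "worker", "window_frame"


@dataclass
class HOIGraph:
    nodes: dict = field(default_factory=dict)  # node_id -> Node
    edges: list = field(default_factory=list)  # (subject_id, relation, object_id)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_interaction(self, subject_id: str, relation: str, object_id: str) -> None:
        # An interaction can only link entities that were already identified.
        if subject_id not in self.nodes or object_id not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        self.edges.append((subject_id, relation, object_id))

    def interactions_of(self, subject_id: str) -> list:
        return [(rel, obj) for subj, rel, obj in self.edges if subj == subject_id]


def lone_heavy_lift_violations(graph: HOIGraph, heavy_labels: set, min_lifters: int = 2) -> list:
    """Hypothetical rule: flag heavy objects carried by fewer than min_lifters people."""
    lifters = {}
    for subj, rel, obj in graph.edges:
        if rel == "carrying" and graph.nodes[obj].label in heavy_labels:
            lifters.setdefault(obj, set()).add(subj)
    return sorted(obj for obj, people in lifters.items() if len(people) < min_lifters)
```

For example, a graph in which a single worker `w1` is carrying window frame `f1` yields `['f1']`; adding a second worker carrying the same frame clears the flag.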

3D Synthetic Scene Graph Data Generation

Why Synthetic?

- Training computer vision models for HOI detection and segmentation requires large amounts of data.

- This project's HOI detection focuses narrowly on interactions specific to construction site activity.

- Existing construction-site HOI data is scarce, and capturing enough real footage to build such a dataset at scale is expensive and inefficient.

The synthetic data generation project simulates construction site activity digitally, using 3D models and animations in software such as Unity. This gives the research team full control over variability in the scene and makes the training dataset more useful.

Unity + Python + Blender

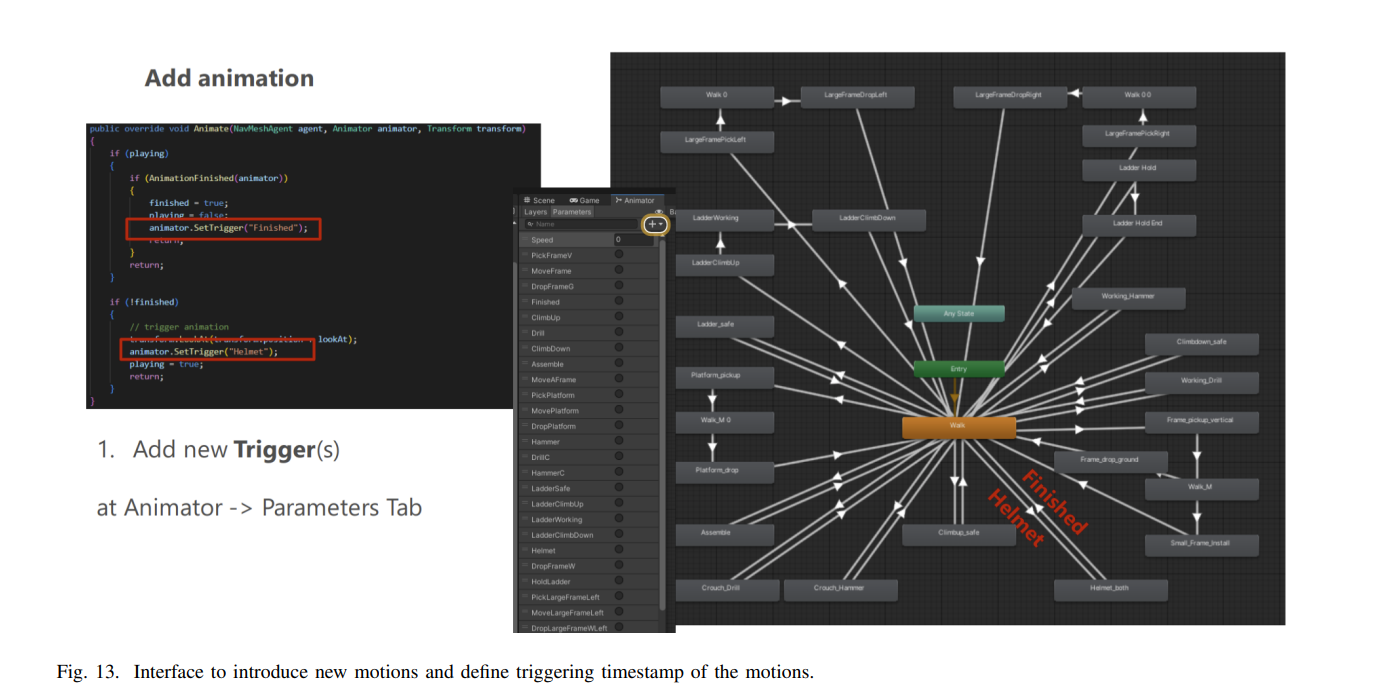

I wrote a script using Blender's Python module (bpy) that takes in motion capture data (human movement mapped onto the skeleton of a 3D model) and outputs a series of variants that are automatically imported into Unity. I chose Blender deliberately because its modeling and animation tools are more intuitive than Unity's.
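One way to keep a batch of variants reproducible is to give each one its own seed and record it in a manifest saved alongside the exported files. Unity imports files placed under a project's `Assets` folder on its next asset refresh, so writing there is enough for them to appear in the editor. This is a sketch under assumptions: the FBX format, the `SyntheticWorkers` subfolder, the manifest layout and all function names are hypothetical, and the Blender-side export itself (for example `bpy.ops.export_scene.fbx`) is left out so the code runs outside Blender.

```python
import json
import random
from pathlib import Path


def variant_seed(base_seed: int, clip_name: str, index: int) -> int:
    """Derive a reproducible seed for one variant of one motion-capture clip."""
    # random.Random hashes string seeds deterministically, so the same
    # (base_seed, clip, index) triple always yields the same seed.
    return random.Random(f"{base_seed}:{clip_name}:{index}").randrange(2**32)


def build_manifest(clip_name: str, n_variants: int, base_seed: int = 0) -> dict:
    """Describe every variant that the Blender-side export would produce."""
    variants = []
    for i in range(n_variants):
        name = f"{clip_name}_v{i:03d}"
        variants.append({
            "name": name,
            "seed": variant_seed(base_seed, clip_name, i),
            "file": f"{name}.fbx",  # assumed export format
        })
    return {"clip": clip_name, "base_seed": base_seed, "variants": variants}


def write_manifest(manifest: dict, unity_assets_dir: str) -> Path:
    """Write the manifest next to the exported files inside the Unity project."""
    out_dir = Path(unity_assets_dir) / "SyntheticWorkers"  # hypothetical subfolder
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{manifest['clip']}_manifest.json"
    path.write_text(json.dumps(manifest, indent=2))
    return path
```

Because every seed is derived from the clip name and index rather than from a shared random stream, any single variant can be regenerated later without rerunning the whole batch.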

The script first randomly modifies the proportions of the base human model using the SMPL-X add-on. This gives us a wide range of body types to work with and makes the training data cover more variation. The animations are recorded with our lab's motion capture system: movements for tasks such as picking up, moving, and assembling a window frame are captured, and the keyframe data is imported. The animation is then transferred onto the human model's skeleton, and its keyframe curves are altered to produce animation variants.
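The two randomization steps can be sketched in plain Python. SMPL-X represents body shape as a short vector of shape coefficients (often called betas); `sample_betas` draws such a vector, assuming 10 coefficients from a normal distribution clipped to ±2, rather than calling the add-on. `vary_clip` treats each keyframe curve as a list of `(frame, value)` pairs standing in for Blender F-Curves and applies three hypothetical variations: a clip-wide time scale, a per-curve amplitude scale about the curve's mean, and a slow sinusoidal drift. The parameter ranges are illustrative, not the values used in the project.

```python
import math
import random

NUM_BETAS = 10  # assumption: a 10-coefficient SMPL-X shape space


def sample_betas(rng: random.Random, num_betas: int = NUM_BETAS,
                 spread: float = 1.0, limit: float = 2.0) -> list:
    """Sample body-shape coefficients, clipped to +/- limit to avoid extreme bodies."""
    return [max(-limit, min(limit, rng.gauss(0.0, spread))) for _ in range(num_betas)]


def _vary_curve(keyframes, time_scale, amp, noise, phase):
    """Apply one set of variation parameters to a curve of (frame, value) pairs."""
    if not keyframes:
        return []
    first = keyframes[0][0]
    span = max(keyframes[-1][0] - first, 1)
    mean = sum(v for _, v in keyframes) / len(keyframes)
    out = []
    for frame, value in keyframes:
        new_frame = first + (frame - first) * time_scale   # faster or slower motion
        new_value = mean + (value - mean) * amp             # larger or smaller movement
        # One sine period across the clip adds a slow, smooth drift.
        new_value += noise * math.sin(2 * math.pi * (frame - first) / span + phase)
        out.append((new_frame, new_value))
    return out


def vary_clip(curves: dict, rng: random.Random, time_scale_range=(0.9, 1.1),
              amp_range=(0.95, 1.05), noise=0.02) -> dict:
    """Produce one animation variant from a dict of named keyframe curves."""
    # A single time scale for the whole clip keeps every channel in sync;
    # amplitude and drift phase are drawn per channel.
    time_scale = rng.uniform(*time_scale_range)
    return {
        name: _vary_curve(kfs, time_scale, rng.uniform(*amp_range), noise,
                          rng.uniform(0.0, 2 * math.pi))
        for name, kfs in curves.items()
    }
```

Seeding a fresh `random.Random` per variant, e.g. `vary_clip(curves, random.Random(seed))`, makes every variant reproducible. Sharing one time scale across all curves matters because scaling channels independently would pull the joints out of sync.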

I also wrote a script that automatically wraps and fits clothing models onto the worker model as its body proportions are randomized, a task that required careful tuning to avoid geometric artifacts.
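The core difficulty in fitting clothing to a randomized body is keeping every garment vertex outside the body surface. Blender offers modifiers such as Shrinkwrap for this kind of fitting; the sketch below instead shows the underlying idea in plain Python and is not necessarily how the project's script works. Each cloth vertex is compared with its nearest body vertex, and if it lies inside the body or closer than a hypothetical clearance `offset`, it is pushed out along that vertex's normal. Using the nearest vertex rather than the nearest surface point is a deliberate simplification.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def push_out(cloth_verts, body_verts, body_normals, offset=0.005):
    """Push cloth vertices that are inside the body, or closer than `offset`
    to it, outward along the normal of the nearest body vertex.

    Vertices are (x, y, z) tuples; body_normals are assumed to be unit length
    and outward-facing. `offset` (scene units) is the clearance to keep.
    """
    fixed = []
    for c in cloth_verts:
        # Brute-force nearest body vertex; a KD-tree would replace this at scale.
        i = min(range(len(body_verts)),
                key=lambda k: _dot(_sub(c, body_verts[k]), _sub(c, body_verts[k])))
        n = body_normals[i]
        height = _dot(_sub(c, body_verts[i]), n)  # signed distance along the normal
        if height < offset:
            c = tuple(x + nx * (offset - height) for x, nx in zip(c, n))
        fixed.append(c)
    return fixed
```

The `offset` value is the kind of parameter that needs tuning: too small and the body pokes through the cloth, too large and the garment visibly floats above the skin.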

I was also responsible for cleaning up and texturing the object models in the Unity scene.

Animation Variation Script Demo